Backup Storage: Best Practices

When planning your backup storage for Catalogic vStor, keep the following guidelines in mind:

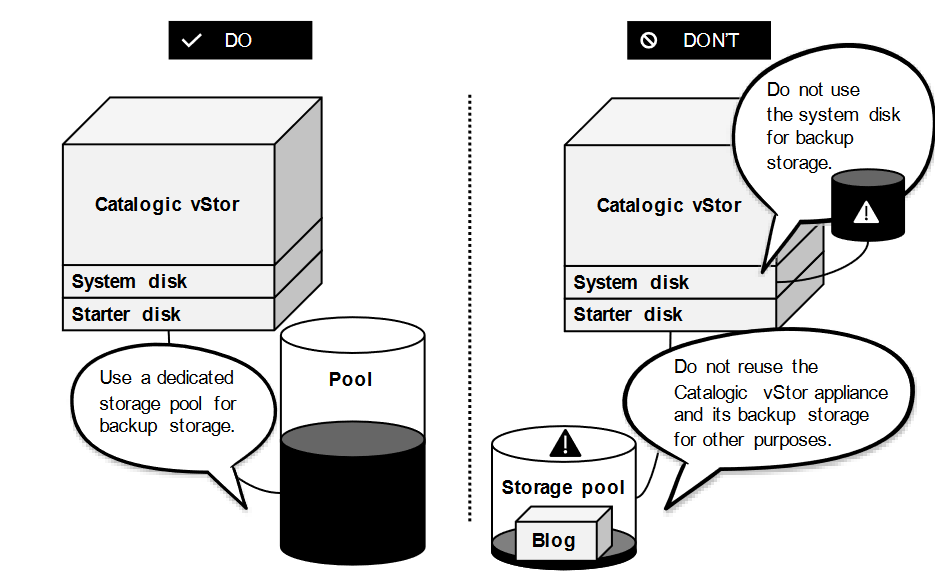

Never create a backup storage pool on the System Disk of the Catalogic vStor appliance.

The backup storage pool should not be used for other purposes, such as installing applications.

When sizing the destination pool's volume, account for client fan-in (the number of clients that back up to it), retention periods, backup frequencies, data growth, and the minimum free-space thresholds. For capacity estimates, contact Catalogic Sales Engineers and see Planning hardware configurations.

Use a 10 Gigabit Ethernet (10GbE) connection between Catalogic vStor and its storage devices.

Use a reliable storage configuration, such as RAID 5 with hot-spare disks, for the primary storage of Catalogic vStor.

Do not run defragmentation utilities on any disk of Catalogic vStor.

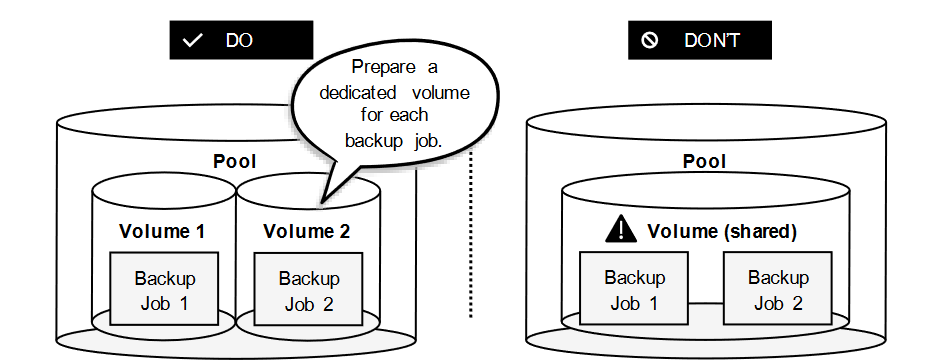

Use a separate volume for each backup job to avoid unnecessary complexity and potential issues.
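The sizing factors listed above (client fan-in, retention, backup frequency, data growth, and free-space thresholds) can be combined into a first rough number. The sketch below is a hypothetical planning aid, not Catalogic's sizing method: `ClientLoad` and `estimate_pool_gb` are illustrative names, and it assumes that changed data is unique per backup, ignores compression and deduplication savings, and reserves headroom so usage stays below the 30% free-space start threshold described under Disk Spaces. Use estimates from Catalogic Sales Engineers for actual capacity planning.

```python
from dataclasses import dataclass


@dataclass
class ClientLoad:
    """Hypothetical per-client inputs for a rough capacity estimate."""
    base_gb: float            # size of the client's initial full backup, in GB
    change_per_backup: float  # fraction of base_gb changed between backups (0.02 = 2%)
    backups_per_day: int      # backup frequency
    retention_days: int       # how long snapshots are retained


def estimate_pool_gb(clients, annual_growth=0.20, years=1.0, min_free_fraction=0.30):
    """Rough pool capacity (GB) for a set of clients; a planning sketch only.

    Assumes changed data is unique per backup, ignores compression and
    deduplication savings, and reserves min_free_fraction of the pool as headroom.
    """
    used_gb = 0.0
    for c in clients:  # client fan-in: every client writes to the same pool
        retained_changes = c.base_gb * c.change_per_backup * c.backups_per_day * c.retention_days
        used_gb += c.base_gb + retained_changes
    used_gb *= (1 + annual_growth) ** years      # compound data growth over the horizon
    return used_gb / (1 - min_free_fraction)     # keep usage below the free-space threshold


# Example: 20 clients, 500 GB each, 3% change per nightly backup, 30-day retention.
fleet = [ClientLoad(500, 0.03, 1, 30) for _ in range(20)]
print(f"{estimate_pool_gb(fleet):,.0f} GB")
```

Dividing by `1 - min_free_fraction` is what turns "data to store" into "pool size": with a 30% reserve, the data should occupy at most 70% of the pool.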

Storage Pools

Storage pools in Catalogic vStor can be organized in different ways:

In most cases, a single storage pool is sufficient and is the easiest to manage.

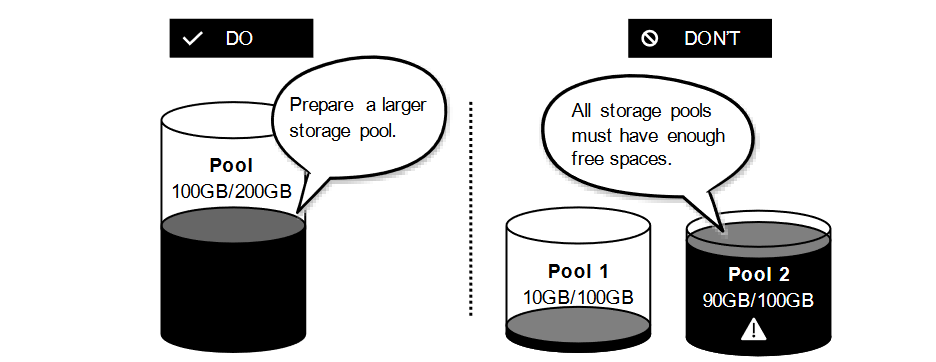

If you need different disk types or sizes, or want more flexibility in segregating data and tiering storage, multiple storage pools can be beneficial. However, this approach divides the available free space across the pools.

When creating a storage pool, provision multiple logical disks to enhance RAID performance. For detailed guidance, see Managing Storage Pools in Catalogic vStor.
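The trade-off noted above, that multiple pools divide free space, is easiest to see with numbers. This sketch uses made-up free-space figures and hypothetical pool names, and assumes (as in ZFS) that a volume lives entirely inside one pool, so the largest volume you can place is bounded by the single pool with the most free space rather than by the total.

```python
def free_space_summary(pool_free_gb):
    """Return (total free GB, largest free GB in any single pool).

    A volume is created inside one pool, so the largest new volume you can
    place is bounded by the pool with the most free space, not by the total.
    """
    total = sum(pool_free_gb.values())
    largest = max(pool_free_gb.values(), default=0.0)
    return total, largest


one_pool = {"pool0": 1000.0}
three_pools = {"pool_a": 400.0, "pool_b": 350.0, "pool_c": 250.0}

print(free_space_summary(one_pool))     # (1000.0, 1000.0)
print(free_space_summary(three_pools))  # (1000.0, 400.0)
```

Both layouts have 1000 GB free in total, but with three pools no single volume larger than 400 GB can be placed.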

Disk Spaces

Managing disk space in Catalogic vStor effectively involves the following:

Monitoring: Regularly monitor disk usage to ensure that ample free space remains. You can check disk usage in the HTML5-based vStor Management Interface under Storage.

Favor a few large backup storage pools over many small ones so that free space is not fragmented across pools. Catalogic DPX checks for adequate free space before and during backup jobs: by default, a job starts only if at least 30% of the space is free and continues only while at least 20% remains free. If free space is insufficient, the backup job stops with an error.

All logical volumes belong to a ZFS pool, which determines their capacity and how free space is distributed among them.

Short-term iSCSI restore operations, such as Instant Access (IA) mapping, typically use little space. Long-term operations, such as Rapid Return to Production (RRP) restores or Full Virtualization of large data sets, can consume significant space in the volume that contains the LUN.
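Because volumes share the free space of their ZFS pool, you can also check pool-level free space directly and compare it with the default DPX thresholds described above. This is a sketch under several assumptions: that you have shell access to the appliance with the `zpool` command on the PATH, that DPX compares against a pool-level free percentage (this page does not say whether it uses pool or volume free space, or whether the comparison is `>=` or `>`), and that `backup_gate` is only a hypothetical mirror of the documented 30%/20% defaults, not DPX's actual logic. Note that `zpool list` reports pool-level FREE, which can differ from the space available to a particular volume.

```python
import subprocess

START_MIN_FREE = 0.30     # default free-space threshold to start a backup (per the text)
CONTINUE_MIN_FREE = 0.20  # default free-space threshold to keep a backup running


def parse_zpool_list(output):
    """Parse `zpool list -H -p -o name,size,free` output into {pool: free fraction}."""
    fractions = {}
    for line in output.strip().splitlines():
        name, size, free = line.split("\t")  # -H: tab-separated, no header line
        size_bytes = int(size)               # -p: exact byte values
        fractions[name] = int(free) / size_bytes if size_bytes else 0.0
    return fractions


def backup_gate(free_fraction, job_running):
    """Hypothetical gate mirroring the documented 30% (start) / 20% (continue) defaults."""
    threshold = CONTINUE_MIN_FREE if job_running else START_MIN_FREE
    return free_fraction >= threshold


def read_zpool_free():
    """Query ZFS directly; assumes shell access to the appliance and zpool on PATH."""
    out = subprocess.run(
        ["zpool", "list", "-H", "-p", "-o", "name,size,free"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_zpool_list(out)


# Offline example with sample output instead of a live zpool call.
sample = "backup_pool\t1000000000000\t250000000000\n"
for pool, frac in parse_zpool_list(sample).items():
    print(pool, f"{frac:.0%} free",
          "start:", backup_gate(frac, job_running=False),
          "continue:", backup_gate(frac, job_running=True))
```

In the sample, a pool with 25% free space is below the start threshold but above the continue threshold: a running job would proceed, while a new job would not start.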

Local storage

Review the following tips about attaching local storage devices to Catalogic vStor:

Use direct-attached storage (DAS) for local storage. Expand Catalogic vStor’s capacity by attaching raw disk volumes, such as VMDKs or other block storage devices.

For the Catalogic vStor virtual appliance, use VMDKs backed by physical raw device mappings (pRDMs) rather than standard VMDKs on a VMFS datastore, for better performance.

When running Catalogic vStor on a Linux server, add the additional disks to the system and make sure the Linux operating system recognizes them.
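One way to confirm that the Linux kernel recognizes a newly attached disk, before adding it to a storage pool, is to read the block devices from sysfs, where `/sys/block/<dev>/size` is reported in 512-byte sectors. This is a generic Linux check, not a Catalogic tool; `list_block_devices` and the skipped `loop`/`ram` prefixes are illustrative choices. The `lsblk` command shows the same information interactively.

```python
import os

SECTOR_BYTES = 512               # sysfs reports /sys/block/<dev>/size in 512-byte sectors
SKIP_PREFIXES = ("loop", "ram")  # virtual devices that are not attached disks


def list_block_devices(sys_block="/sys/block"):
    """Return {device name: size in bytes} for disks the Linux kernel has detected."""
    if not os.path.isdir(sys_block):
        return {}  # not Linux, or sysfs is not mounted
    devices = {}
    for name in sorted(os.listdir(sys_block)):
        if name.startswith(SKIP_PREFIXES):
            continue
        try:
            with open(os.path.join(sys_block, name, "size")) as f:
                sectors = int(f.read().strip())
        except (OSError, ValueError):
            continue  # skip entries without a readable size
        devices[name] = sectors * SECTOR_BYTES
    return devices


before = list_block_devices()
# ... attach the new disk (for example, a pRDM-backed VMDK) and let the kernel detect it ...
after = list_block_devices()
for name in sorted(after.keys() - before.keys()):
    print(f"new disk: /dev/{name} ({after[name] / 2**30:.1f} GiB)")
```

Comparing the device list before and after attaching the disk isolates the new device, which helps avoid adding the wrong disk (such as the System Disk) to a pool.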

Network storage

Connect Catalogic vStor to network storage using the Fibre Channel Protocol for optimal performance and reliability.

If you use iSCSI, connect through a dedicated network interface to reduce complexity and limit disruptions during network outages.

For NFS storage, set up an NFS datastore and the associated VMDKs, following VMware's Best Practices For Running NFS with VMware vSphere.

Compression best practice

Data compression in Catalogic vStor is enabled by default. Manage this setting for each pool via the Storage section of the vStor Management Interface.

Verify that the system is equipped with sufficient resources to support the extra load caused by data compression.

VMware Agentless Protection jobs are more prone to failure when compression is enabled.
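To check whether the system has the resources to carry the extra load of compression, it can help to gauge how compressible typical data is and how much CPU time compression costs. This sketch uses Python's `zlib` purely as a stand-in: vStor's pool compression may use a different algorithm with a different speed and ratio, so treat the numbers as relative indicators only. `probe_compression` and `probe_file` are hypothetical helpers.

```python
import time
import zlib


def probe_compression(data, level=1):
    """Return (compression ratio, throughput in MB/s) for one sample buffer."""
    if not data:
        return 1.0, 0.0
    start = time.perf_counter()
    compressed = zlib.compress(data, level)  # zlib is a stand-in, not vStor's codec
    elapsed = time.perf_counter() - start
    ratio = len(data) / len(compressed)
    mb_per_s = len(data) / 1e6 / elapsed if elapsed > 0 else float("inf")
    return ratio, mb_per_s


def probe_file(path, sample_bytes=64 * 1024 * 1024, level=1):
    """Probe the first sample_bytes of a file that is representative of your backups."""
    with open(path, "rb") as f:
        return probe_compression(f.read(sample_bytes), level)


ratio, speed = probe_compression(b"log line: status=OK\n" * 100_000)
print(f"ratio {ratio:.1f}:1 at {speed:.0f} MB/s on one core")
```

Data that barely compresses (a ratio near 1:1), such as already-compressed or encrypted files, generally gains little from compression while still costing CPU time.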

Deduplication best practice

Data deduplication (dedup) is not enabled by default. Consider enabling it in specific scenarios:

Catalogic DPX's backup technology already reduces data redundancy by backing up only new or changed data blocks. Deduplication may therefore be unnecessary unless there is significant data duplication within a single source or across multiple sources.

Deduplication is resource-intensive. Ensure that ample memory, cache storage, and CPU capacity are available to maintain performance. Insufficient resources can lead to longer snapshot removal times and slower writes.
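To judge whether there is significant data duplication before enabling dedup, you can sample representative files from your sources and count how many fixed-size blocks repeat. This is a rough sketch: the helper names are hypothetical, the 128 KiB block size is an assumption (match it to the block size of your volumes), and it only detects identical, block-aligned data, which is roughly what block-level deduplication can exploit.

```python
import hashlib


def dedup_ratio(blocks):
    """Total blocks / unique blocks; 1.0 means no duplicate blocks were found."""
    seen = set()
    total = 0
    for block in blocks:
        seen.add(hashlib.sha256(block).digest())  # identical blocks share a digest
        total += 1
    return total / len(seen) if seen else 1.0


def iter_blocks(path, block_size):
    """Yield fixed-size blocks from a file (the last block may be shorter)."""
    with open(path, "rb") as f:
        while block := f.read(block_size):
            yield block


def estimate_dedup_ratio(paths, block_size=128 * 1024):
    """Estimate block-level duplication across sample files from one or more sources."""
    return dedup_ratio(b for p in paths for b in iter_blocks(p, block_size))


print(dedup_ratio([b"block-A", b"block-A", b"block-B", b"block-A"]))  # 2.0
```

A ratio close to 1.0 suggests that enabling deduplication would add memory and CPU cost for little space saving.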
